AI will not replace HR. Instead, it will force HR leaders to build hybrid workplaces where humans and AI systems work together.

The organizations that win will be those that use AI to automate routine tasks while protecting psychological safety, fairness, and dignity—and equipping managers to lead teams of humans and AI agents side by side.

Executives, media outlets, and HR technology vendors continue to push the narrative that artificial intelligence is about to transform Human Resources. The message is persuasive: automation will streamline hiring, performance management, payroll, and employee support.

Yet these predictions treat HR as a set of transactions rather than a discipline built on trust, empathy, fairness, and communication.

Real HR challenges rarely arise because teams lack tools. They arise because humans experience fear, uncertainty, miscommunication, and emotional strain in ways that cannot be reduced to structured data or predictable rules.

AI is powerful and already reshaping workflows. But when organizations implement AI without human oversight, communication, and limits, psychological safety deteriorates. Employees wonder whether machines are judging their value. Leaders assume algorithmic output equals truth. And distrust spreads quietly.

The future does not belong to organizations that automate fastest. It belongs to those that integrate AI thoughtfully while protecting human dignity.

The Hype vs. the Reality of AI in HR

AI adoption in HR is accelerating because executives face rising cost pressure, turnover concerns, and compliance complexity. Automation appears to offer a rapid solution.

Recruiting platforms promise “bias-free screening.” Performance management systems promote algorithmic fairness. Vendors imply that HR processes can run themselves.

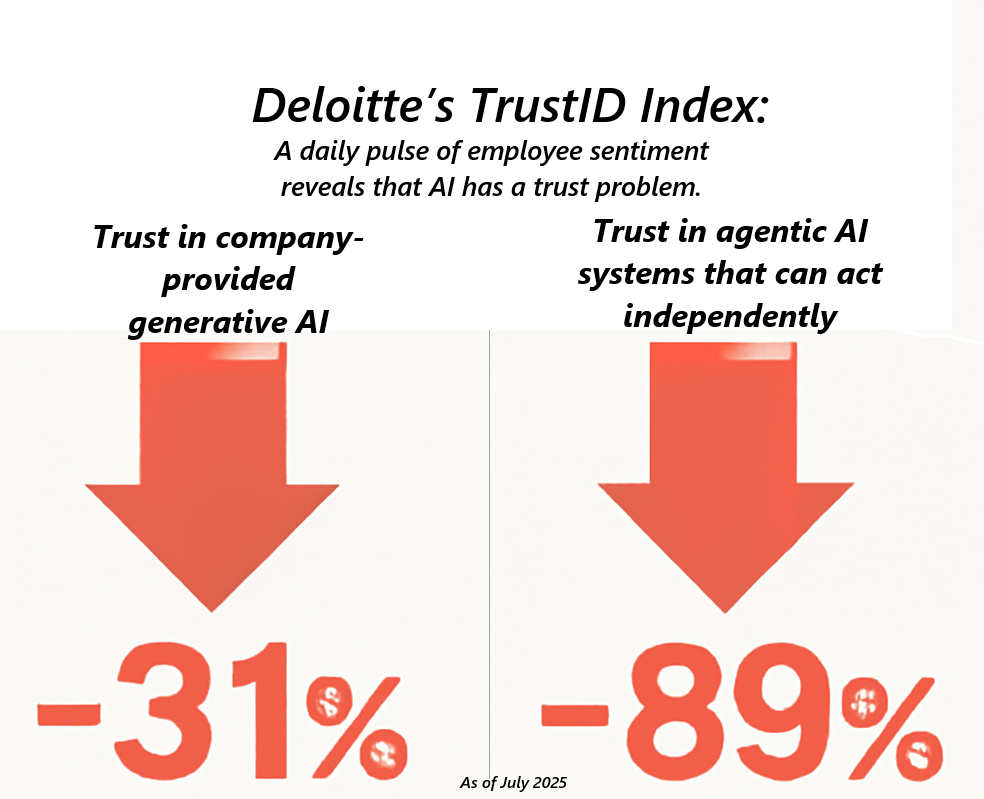

However, surveys show that employees are uncertain about how AI affects their work, and many lack clear guidelines for responsible use. In some organizations, workers use AI privately because they fear judgment or are unsure what policy allows. This indicates a trust gap, one that HR must close intentionally.

What AI Does Well in HR

When used appropriately, AI reduces administrative burden and enables HR teams to spend more time resolving interpersonal conflict, coaching managers, and building culture. AI is most helpful when it produces starting points—not final decisions.

AI delivers value where tasks are structured and high-volume. Examples include:

- screening hundreds of resumes for required technical skills

- flagging payroll anomalies against schedules

- summarizing multi-source feedback to identify patterns

- generating draft language for consistent communications

Where AI Fails—and Why Trust Erodes

Employees experience anxiety when AI influences decisions about:

- performance evaluations

- disciplinary action

- hiring and promotion

- pay equity

Because AI systems learn from historical patterns, they risk replicating organizational bias. And machines cannot understand context, emotion, or lived experience—especially in cases involving illness, caregiving, identity, conflict, trauma, or discrimination.

Example:

Imagine a system flagging an employee as a “turnover risk” because they turned off their camera, missed deadlines, and sent fewer messages. Without human inquiry, management may assume disengagement or lack of commitment. However, this employee may be navigating cancer treatment and using leave discreetly. The algorithm observes behavior, not meaning.

Psychological safety is damaged when AI outputs are treated as truth rather than signals for human conversation.

Psychological Safety in an Automated Workplace

Psychological safety means that employees feel safe speaking up without fear that doing so will result in embarrassment, punishment, or negative consequences. In simple terms, it is the belief that you will not be harmed—professionally or socially—for asking questions, raising concerns, or admitting mistakes.

Historically, psychological safety has referred to interpersonal interactions among humans: employees feel comfortable telling their manager they are overwhelmed, pushing back on a deadline, or reporting unethical behavior. In traditional teams, psychological safety is shaped through tone, relationships, and trust built over time.

Automation introduces new risks because AI tools—by nature—operate invisibly and often without clear explanation. When employees do not know how systems are scoring them, what data is being collected, or whether automated insights feed into major decisions (like performance reviews, layoffs, or promotions), uncertainty increases. That uncertainty can create fear and silence.

Why does silence matter? Because silence hides problems until they become crises.

In an automated workplace, psychological safety means that employees believe:

- they can question automated recommendations without looking resistant

- they will not face disciplinary action or retaliation for identifying errors in AI output

- they can ask for clarification when AI reports or dashboards show unfamiliar metrics

- decisions about their employment will include human review and context

- raising concerns about fairness, privacy, or bias will be taken seriously

This matters because algorithms make mistakes. If employees do not feel safe challenging those mistakes, the organization will not catch errors early. Over time, employees withdraw emotionally, which increases turnover risk and weakens culture.

In other words, psychological safety becomes a form of resilience in organizations using AI. It preserves a sense of fairness and belonging even in highly automated environments. Without it, automation undermines trust—and trust is the foundation on which engagement, innovation, and retention depend.

Employees stay engaged when they feel:

- respected and heard

- evaluated fairly

- included in decisions impacting their work

- safe to ask questions and challenge assumptions

AI-driven systems threaten this safety if workers suspect invisible surveillance, secret scoring models, or automated determinations.

HR’s responsibility expands in an AI era: protect fairness and dignity by ensuring transparency, appeal mechanisms, and communication that clarifies where AI is used, why, and how decisions remain human-led.

Hybrid Human–AI Teams: What Work Will Really Look Like

Future teams will include:

- human employees

- AI assistants/co-pilots

- automated task systems

AI may handle workflows like triaging employee questions or monitoring compliance, while humans handle judgment, conflict resolution, and relationship management.

This does not eliminate HR. It elevates HR into a governance, ethical leadership, and cultural stewardship role.

Picture a sales operations team in 2029. The team includes five human employees and three AI agents—digital coworkers built into the company’s internal systems. One AI agent drafts sales proposals, another monitors real-time client sentiment signals generated from communication patterns, and a third supports customer onboarding by detecting missing compliance documents.

During the morning stand-up meeting, each AI agent appears as a participant tile on the video call. Their contributions come through chat summaries and visual dashboards, not spoken dialogue. For example, the AI compliance assistant posts: “Three new onboarding risks identified. Recommending escalation for Account 5521.”

A human employee rolls their eyes. The AI "flagged" this account last week because it failed to recognize that the missing item was waived for government entities. Everyone now understands that AI-generated recommendations require context and verification, just like feedback from a junior analyst. It is not malicious; it is limited. But the annoyance is real.

Later that afternoon, a manager is reviewing quarterly performance data assisted by the analytical AI. The system suggests that employee turnover risk has increased for two specialists. In reality, the “risk” flag simply reflected vacation schedules and temporary dips in processing volume. The manager makes a note to address the issue not by penalizing anyone, but by meeting with each specialist to check in about workload and wellbeing.

Even highly capable AI cannot interpret the emotional landscape, interpersonal dynamics, or context of human lives. Humans will still need to ask what the data means—not just what the numbers say.

The workplace begins to resemble a hybrid ecosystem: humans collaborating with AI systems not as neutral tools, but as contributors that affect workflows, expectations, and relationships. Just like coworkers, AI systems produce work that others rely upon. And just like coworkers, they sometimes misunderstand priorities or make incorrect assumptions.

In this emerging workplace model, HR will be indispensable—not only as a compliance function, but as a translator between human concerns and automated decision systems. HR will be the AI sherpa guides for the new workplace, helping employees navigate when to trust AI insights, when to verify them, and how to escalate concerns when automation appears to contradict fairness or common sense.

Hybrid teams will require new etiquettes and norms: acknowledging AI suggestions in meetings, documenting overrides, and clarifying why human judgment prevailed. These conversations will be uncomfortable at first. But they will be critical for preserving psychological safety and ensuring that AI augments human judgment rather than replacing it.

The organizations that succeed will not be the ones that eliminate the most positions through automation. They will be the ones whose employees feel confident collaborating with AI and trust that human leaders remain accountable for ultimate decisions.

"HR will be the AI sherpa guides for the new workplace..."

Practical Framework: What Should AI Do vs. Humans?

Ask three questions before automating any HR workflow:

1. Is the task rules-based or judgment-based?

AI is appropriate when outcomes are determined by objective rules.

Humans are required when factors vary based on context.

Example:

AI can detect timecard anomalies, but only human inquiry can determine whether they signal disengagement or personal hardship.

2. Does the task impact fairness, identity, or dignity?

Promotions, pay, and corrective action require human judgment.

AI outputs should be advisory, not determinative.

Employees attach deep meaning to decisions that reflect worth, contribution, or standing inside the organization. When AI systems influence outcomes in these areas without transparent human review, trust can erode quickly.

Example:

An AI system analyzes quarterly performance review text and productivity metrics, ranking employees for bonus eligibility. It recommends bypassing an employee who took protected medical leave earlier in the year, incorrectly interpreting reduced hours as diminished performance.

If a manager accepts this recommendation at face value, the organization unintentionally penalizes the employee for circumstances outside their control. That decision harms dignity and undermines perceptions of fairness—even if the harm was accidental.

The AI report can still be valuable; it surfaces data efficiently. But the final decision must include human review and context. A manager might conclude that despite lower hours, the employee demonstrated exceptional problem-solving and maintained strong client relationships. Recognizing that nuance preserves fairness and prevents injustice that an algorithm cannot see.

AI can support equitable compensation and promotion decisions, but it cannot substitute for human responsibility to ensure those decisions reflect legal and ethical obligations.

3. Is emotion involved?

If the task requires empathy, reassurance, or discretion, AI should never be the first messenger.

Many HR conversations require empathy, reassurance, and space for individuals to be heard. Emotional nuance is especially important in matters involving conflict, health, family obligations, discipline, or job security. Automated communication in these contexts can feel cold, dismissive, or threatening—even when the content itself is neutral.

Example:

An attendance monitoring system automatically detects a pattern of late arrivals and drafts an email stating that corrective action will be taken if punctuality does not improve. If the email is sent automatically, it arrives without warning for an employee who is caring for an ill parent and struggling with unpredictable transportation.

Receiving a disciplinary communication without a prior conversation can trigger defensiveness, shame, or fear. The employee may withdraw or resign not because of the attendance issue, but because they feel unseen and reduced to a data point.

By contrast, if AI prepares a report and a draft message for the manager to review and personalize, the manager can first request a conversation:

“I noticed inconsistencies in attendance recently. Before we move forward, I’d like to understand what is happening and whether there are ways we can support you.”

The facts remain the same, but the experience for the employee is profoundly different. AI identifies signals; humans provide compassion and judgment.
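The three questions can be read as a simple triage rule, checked from the most protective answer down. The sketch below is a minimal illustration, assuming each workflow can be summarized by three yes/no answers; the names `Workflow`, `Role`, and `triage` are hypothetical, and in practice the answers should come from a human discussion, not a checkbox.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    AUTOMATE = "AI may handle the task (rules-based, low stakes)"
    ADVISORY = "AI drafts or flags; a human decides"
    HUMAN_FIRST = "a human initiates; AI may only prepare material"


@dataclass
class Workflow:
    # Hypothetical summary of an HR workflow: one flag per framework question.
    name: str
    judgment_based: bool   # Q1: does the right outcome depend on context?
    affects_dignity: bool  # Q2: does it touch fairness, identity, pay, promotion, discipline?
    emotional: bool        # Q3: does it require empathy, reassurance, or discretion?


def triage(wf: Workflow) -> Role:
    """Apply the three questions, most protective answer first."""
    if wf.emotional:
        # Q3: AI should never be the first messenger.
        return Role.HUMAN_FIRST
    if wf.judgment_based or wf.affects_dignity:
        # Q1 and Q2: AI output is advisory, not determinative.
        return Role.ADVISORY
    # Objective rules, no dignity or emotional stakes.
    return Role.AUTOMATE


if __name__ == "__main__":
    examples = [
        Workflow("timecard anomaly detection", False, False, False),
        Workflow("bonus eligibility ranking", True, True, False),
        Workflow("attendance corrective-action notice", True, True, True),
    ]
    for wf in examples:
        print(f"{wf.name}: {triage(wf).name}")
```

Running it prints AUTOMATE, ADVISORY, and HUMAN_FIRST for the three examples. Note that "timecard anomaly detection" covers only the detection step; deciding what an anomaly means is a separate, judgment-based workflow.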

Preparing Managers to Lead Hybrid Teams

Managers must gain skills in:

- crafting effective prompts that stay within compliance guardrails

- explaining how workplace AI tools work

- identifying when to override AI recommendations

- recognizing when employees feel monitored or judged

- modeling inquiry before assumption

Practical scenario:

An AI candidate ranking tool suggests hiring Candidate B. A manager trained in AI-assisted leadership might say:

“We’ll treat this as one source of insight. Let’s review Candidate A and Candidate C again, because algorithms sometimes undervalue non-linear career paths that reveal grit, adaptability, or leadership.”

The tool becomes a prompt for better decisions—not a shortcut to avoid thinking.

Governance and HR Policy Requirements

Organizations must establish:

- transparency expectations about AI use

- escalation and appeal procedures

- documentation when managers override AI recommendations

- periodic fairness audits

- data minimization and retention controls

- psychological safety measurement frameworks

HR—not IT—should lead these policy decisions because they affect employee rights and culture.

**A Word About Compliance and Legal Risk: Why It Must Be Central to AI Governance and Must Come from HR**

There is a growing risk that poorly governed AI tools will expose employers to liability, particularly in the areas of discrimination, employee privacy, and recordkeeping. AI systems operate on training data and statistical inferences that may unintentionally reinforce historical patterns of disadvantage. If an algorithm systematically scores certain demographic groups lower on performance potential, attrition risk, cultural fit, or promotability, the organization may be liable for disparate impact discrimination—even if no human intended bias to occur.

Employment laws have always held employers responsible for their employment practices. Courts and regulators will not accept “the algorithm made me do it” as a defense. The Equal Employment Opportunity Commission has already issued guidance warning employers that AI-driven screening and evaluation tools must comply with Title VII standards for nondiscrimination. Several states, including Illinois and New York, have passed or proposed laws requiring auditability, transparency, and employee notification when automated decision systems are used in hiring or evaluation.

That means employers must be able to demonstrate how automated tools operate, how they are validated, and how decisions influenced by AI systems are reviewed by humans. Documentation such as recorded manager overrides, audit logs, and bias mitigation actions will be critical evidence if the organization’s processes are challenged.
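As an illustration of what a recorded manager override might capture, here is a minimal sketch assuming an append-only JSON Lines log file. The field names and the `record_override` helper are hypothetical, not a legal standard or any vendor's schema; what a record must contain should be settled with counsel.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class OverrideRecord:
    # Hypothetical fields for one documented override.
    decision_id: str
    tool_name: str
    ai_recommendation: str
    human_decision: str
    reviewer: str
    rationale: str     # why human judgment prevailed
    recorded_at: str   # ISO 8601 timestamp in UTC


def record_override(path: str, decision_id: str, tool_name: str,
                    ai_recommendation: str, human_decision: str,
                    reviewer: str, rationale: str) -> OverrideRecord:
    """Append one override to a JSON Lines audit log and return the record."""
    if not rationale.strip():
        # An override without a reason is hard to defend later.
        raise ValueError("An override must include a written rationale.")
    rec = OverrideRecord(
        decision_id=decision_id,
        tool_name=tool_name,
        ai_recommendation=ai_recommendation,
        human_decision=human_decision,
        reviewer=reviewer,
        rationale=rationale,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    # Open in append mode so earlier entries are never rewritten.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(rec)) + "\n")
    return rec
```

For instance, the bonus-eligibility scenario in the framework above might be logged as `record_override("overrides.jsonl", "bonus-review-17", "bonus-ranker", "exclude", "include", "manager-42", "Reduced hours reflect protected medical leave; client results were strong.")`, where every value is hypothetical. Requiring a non-empty rationale is the design choice that matters: it forces the "why human judgment prevailed" conversation onto the record.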

Similarly, data minimization and retention controls are no longer theoretical compliance issues. Many AI-driven HR tools collect behavioral metadata—such as when employees respond to messages, how quickly they complete tasks, or whether productivity patterns fluctuate. Without clear rules restricting what is collected, how long it is retained, and how it is used, employers risk violating federal, state, and international data privacy laws, including biometric and profiling requirements.
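Retention rules are easier to enforce when they are written down as data rather than left to individual judgment. The sketch below assumes each collected record carries a category and a collection date; the categories and day counts are placeholders for illustration only, not legal guidance, and real periods must come from counsel and the laws that apply to the organization.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Placeholder policy: category -> days to keep. None means "do not collect at all".
RETENTION_DAYS: dict[str, Optional[int]] = {
    "message_response_times": 90,
    "task_completion_times": 180,
    "productivity_patterns": None,
}


@dataclass
class Record:
    category: str
    collected_on: date


def apply_retention(records: list[Record], today: date) -> list[Record]:
    """Return only the records the policy allows the employer to keep today."""
    kept = []
    for r in records:
        days = RETENTION_DAYS.get(r.category)
        if days is None:
            # Categories that are disallowed or not listed are dropped (data minimization).
            continue
        if today - r.collected_on <= timedelta(days=days):
            kept.append(r)
    return kept
```

Treating any unlisted category as "do not keep" is deliberate: new data types a vendor starts collecting are excluded until someone makes an explicit policy decision about them. Running the check on a schedule and deleting what it excludes is what turns the written rule into an actual control.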

Escalation and appeal procedures must be implemented because denying employees an avenue to contest automated outcomes can constitute procedural unfairness, especially if automated scoring influences pay, discipline, or advancement. HR must treat algorithmic recommendations the same way it treats human recommendations: review, validate, document, and correct when necessary.

Fairness audits and psychological safety measurement frameworks are not optional add-ons; they are part of demonstrating due diligence. A quarterly audit process should examine whether automated systems produce patterns of disadvantage for employees in protected classes or disproportionately penalize workers with caregiving obligations, disabilities, or temporary medical needs.
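One common screening heuristic for this kind of audit is to compare each group's rate of favorable outcomes (for example, being recommended for a bonus) with the rate of the most-favored group. The US Uniform Guidelines on Employee Selection Procedures describe a "four-fifths" ratio as a rough indicator of possible adverse impact; it is a rule of thumb, not a legal safe harbor. A minimal sketch, assuming outcome counts per group are already available; the group labels and counts are made up for illustration.

```python
def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Return each group's favorable-outcome rate divided by the highest group's rate.

    outcomes maps a group label to (favorable_count, total_count).
    Groups with no members are skipped.
    """
    rates = {g: fav / total for g, (fav, total) in outcomes.items() if total > 0}
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody received the favorable outcome, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}


def flag_for_review(outcomes: dict[str, tuple[int, int]],
                    threshold: float = 0.8) -> list[str]:
    """List groups whose impact ratio falls below the threshold (0.8 = four-fifths)."""
    ratios = impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Illustrative, made-up bonus-eligibility counts.
    bonus = {
        "no leave taken": (45, 100),
        "took medical or caregiving leave": (12, 40),
    }
    print(impact_ratios(bonus))
    print(flag_for_review(bonus))
```

In the made-up example, 30% of employees who took leave were recommended versus 45% of those who did not, a ratio of about 0.67, so that group is flagged. A flag is a prompt for human investigation, not a finding; with small groups the ratio swings widely, so the audit should look at counts as well as ratios.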

For these reasons, governance cannot be delegated to IT alone. IT understands systems; HR understands people, risk, and legal obligations. Because AI changes the balance of power between employee and employer and influences employment outcomes, HR must lead policy development, direct audits, and establish the social norms that preserve trust. IT and vendors are implementation partners, but HR is the owner and steward of employee rights in an automated workplace.

Why Outsourced HR Services Will Be Critical

Internal HR teams are stretched thin and rarely staffed with AI governance expertise. An outsourced HR partner can provide objective insight, policy development, and training for hybrid workforce transitions.

To take the point further: as AI becomes more prevalent in the workplace, HR will increasingly be viewed as overhead, and some businesses will eliminate or gut their HR departments. This knee-jerk attempt to cut personnel costs will eventually backfire, and those organizations will need outsourced HR expertise to fix AI-driven problems. Most traditional HR practitioners will likely lag behind in this skill area.

My Virtual HR Director was virtual before 'virtual' was a thing. We have been on the cutting edge of technology and best practices for nearly 20 years. We are already pivoting into the AI economy and leveraging our human experience and knowledge to help companies make the quantum leap to artificially intelligent employees.

USE CASE: Meet Sensay

You're going to love this...

Sensay is an AI-powered knowledge transfer and onboarding platform designed to preserve what employees know before they leave the organization and make that knowledge available to new hires on day one. Instead of relying on job shadowing, tribal knowledge, or incomplete documentation, Sensay uses AI interview prompts and conversational capture to extract expertise from outgoing employees and convert it into a searchable “digital expert” that new employees can interact with.

The result is faster onboarding, smoother handoffs, and reduced knowledge loss during turnover, retirement, or extended leave. Sensay does not replace HR—it strengthens HR’s ability to protect institutional knowledge and create consistent learning experiences across the workforce.

How AI Tools Like Sensay Support Responsible Knowledge Transfer and Onboarding

Organizations today face a critical but under-discussed challenge: when experienced employees retire, resign, or take extended leave, they take years of tacit knowledge with them. Documentation rarely captures “how the work actually gets done” – the intuition, shortcuts, and mental models that experts develop over decades.

Sensay is an emerging AI platform specifically designed to address this knowledge-drain risk by capturing expert knowledge during offboarding and making it accessible to new hires during onboarding. A recent Sensay showcase demonstrated how the AI conducts structured interviews, extracts tacit knowledge, organizes it into a searchable knowledge base, and deploys it directly into collaboration tools like Teams or Slack.

This capability changes onboarding and offboarding by transforming knowledge into a living, continuously accessible asset rather than a one-time transfer.

How Sensay works in practice

Using a departing employee as an example, Sensay guides HR and managers through a process that includes:

- creating a tailored interview based on job title, duties, and custom prompts

- prompting the departing employee with expert-level interview questions via “Sophia,” Sensay’s conversational AI

- conducting a voice-based, natural interview capturing workflows, tips, exceptions, undocumented processes, and tacit knowledge

- chunking the responses into structured knowledge that can be queried

- deploying the resulting knowledge base into communication platforms

The showcase transcript shows Sophia walking an employee through the interview, capturing insight into tasks, unwritten workflows, troubleshooting steps, and reasoning that normally disappears when an employee exits.

It also shows new hire Sally learning the job independently “from day one” through a chatbot mentor instead of relying exclusively on shadowing a coworker.

This illustrates Sensay’s core promise: onboarding with less dependency on internal bandwidth and fewer context gaps for new employees.

Why HR remains essential for success

Although Sensay automates key elements of knowledge capture, HR plays indispensable roles in governance, fairness, and organizational adoption. The company itself explains that Sensay does not replace HR; instead, HR administrators configure, oversee, and ensure the integrity of the knowledge transfer process.

HR retains responsibility for:

- selecting which roles and exits require knowledge capture

- setting interview goals and ensuring coverage of culture, expectations, and risk signals

- identifying employees who may need accommodations or safe exit conversations

- screening collected knowledge for confidentiality concerns

- developing policy for who can query the knowledge base and how

Just because a digital knowledge base exists does not mean all employees should have unrestricted access to client details, strategic reasoning, regulated data, or protected leave history. Sensay does not absolve HR of confidentiality or compliance duties.
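Access rules like these can be written as an explicit mapping from role to the most sensitive material that role may see, checked before any query result is returned. A minimal sketch, assuming HR tags each knowledge chunk with a sensitivity label during screening; the labels, roles, and `can_view` function are hypothetical and do not describe Sensay’s actual permission model.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    GENERAL = 0      # workflows, tips, troubleshooting steps
    CLIENT = 1       # client details
    STRATEGIC = 2    # strategic reasoning
    RESTRICTED = 3   # regulated data, protected leave history


# Hypothetical policy: the highest sensitivity each role may see.
ROLE_CEILING = {
    "new_hire": Sensitivity.GENERAL,
    "account_team": Sensitivity.CLIENT,
    "leadership": Sensitivity.STRATEGIC,
}


def can_view(role: str, label: Sensitivity) -> bool:
    """Unknown roles get no access, and RESTRICTED chunks are never served."""
    ceiling = ROLE_CEILING.get(role)
    if ceiling is None or label == Sensitivity.RESTRICTED:
        return False
    return label <= ceiling


def filter_results(role: str, chunks: list[tuple[str, Sensitivity]]) -> list[str]:
    """Keep only the chunk texts the given role is allowed to see."""
    return [text for text, label in chunks if can_view(role, label)]
```

Denying by default (unknown role, or restricted label) keeps a configuration gap from silently exposing data. Ideally, restricted material such as protected leave history is screened out before it reaches the knowledge base at all; the check here is a second line of defense.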

Legal + compliance considerations for Sensay adoption

As with all AI augmentation in HR, employers must proactively address:

- confidentiality of employee and company information

- SOC 2, GDPR, ISO, and other data governance standards (which Sensay is building toward)

- disparate impact risks if knowledge extraction leads indirectly to biased evaluation

- whether captured employee content becomes personnel file material

- retention, deletion, and access policies

- classification of recorded interviews under state wiretap consent requirements

AI knowledge capture introduces new compliance surfaces. HR—not IT—must implement policy and align usage to employment law to avoid inadvertent misuse of the knowledge base.

Strategic benefits HR can unlock using Sensay

The transcript articulates several use cases aligned with pressing organizational risks and HR mandates:

- retirement wave and demographic shifts accelerating knowledge loss

- macro trend of younger generations changing employers more frequently

- extended leaves, sabbaticals, and parental leave requiring continuity plans

- mergers and acquisitions requiring rapid institutional knowledge preservation

- reduced onboarding cycles and reduced dependency on shadowing or tribal knowledge

These are fundamentally HR problems, not IT challenges, and HR must therefore lead AI-supported knowledge strategies.

How My Virtual HR Director can implement Sensay for organizations

My Virtual HR Director can serve as strategic integrator, not merely a software installer. Our implementation services may include:

- identifying knowledge-critical roles and processes

- integrating Sensay into offboarding policy workflows

- establishing fairness, consent, and confidentiality rules

- developing compliant retention + access controls

- creating “AI-assisted onboarding” SOPs

- training managers to avoid over-reliance on AI recommendations

- configuring appeal + correction processes if the AI’s knowledge base is incomplete or incorrect

- establishing measures for psychological safety in AI-enabled knowledge transfer

- auditing the knowledge base for bias, incomplete reasoning, or outdated steps

AI can accelerate knowledge transfer, but only when placed inside human-centered governance. HR remains accountable for meaning, fairness, and employee trust.

Sensay captures the “knowing,” but HR stewards the humans who must use that knowledge responsibly.

The Bottom Line

AI will reshape HR, but not by removing HR from the equation. Organizations that rush automation without human oversight will face disengagement, mistrust, and legal exposure. The organizations that thrive will protect dignity and fairness, communicate transparently, and equip leaders to collaborate with AI responsibly.

AI can accelerate processing. Humans protect psychological safety and fairness. The future of HR is hybrid—and deeply human-centered.

Further Reading:


SHRM – What Psychological Safety Really Means in the Workplace

Explore foundational explanations of psychological safety and common myths workplaces must address.

Gallup – AI Use at Work Continues to Rise

Data on the growing adoption of AI across workplaces and how employee familiarity with AI is evolving.

Workday – AI Trust Gap in the Workforce

Research highlighting the divide between leadership enthusiasm for AI and employee trust, underscoring the need for clear policies and training.

KPMG – Trust, Attitudes and Use of Artificial Intelligence (Global Study 2025)

A global report on public and workplace attitudes toward AI, including trust levels, risks, and governance considerations.

APA – Psychological Safety in the Changing Workplace

Insights on how psychological safety impacts employee well-being and organizational outcomes.

Sensay – Sensay.io